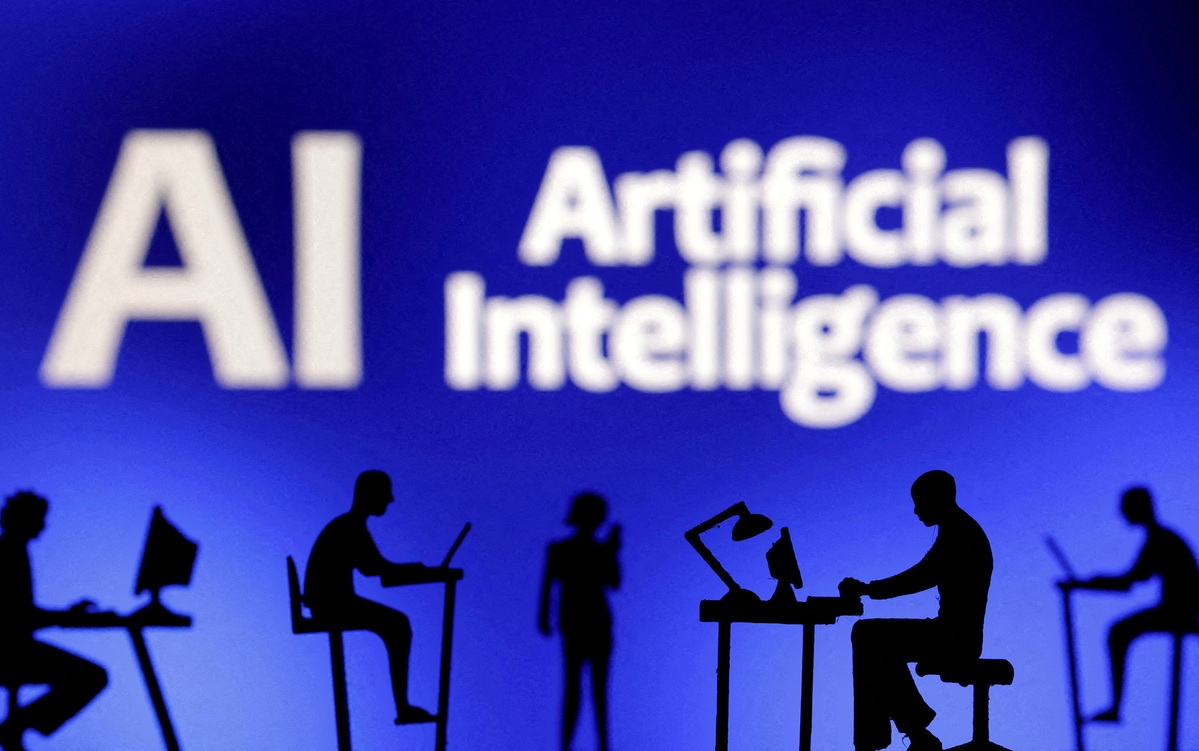

A strong legal firewall for AI security must be built

The generative artificial intelligence services developed by Chinese AI startup DeepSeek have recently attracted extensive attention, but increasingly intense attacks on DeepSeek's online services have also led to service interruptions, data leaks and other security risks. This highlights the need to consider how legal protection can provide a safer environment for the artificial intelligence industry.

Risks to personal information security, false information and algorithmic discrimination are problems that have emerged as AI technology has developed. In response, China has issued laws and regulations, such as the Regulations on the Administration of Internet Information Service Recommendation Algorithms, to strengthen the legal compliance of AI applications.

The Interim Measures for the Management of Generative Artificial Intelligence Services, rolled out in July 2023, explicitly support industry organizations, enterprises, educational and research institutions, public cultural institutions, and relevant professional institutions in collaborating on generative AI technology innovation, data resource construction, transformation and application, and risk prevention.

At the same time, the country is committed to promoting the orderly, classified opening of public data and the expansion of high-quality public data resources. These specific measures are of great significance for resolving potential technological security risks in the field of AI.

On the one hand, the country should continue to refine the legal obligations of AI product developers, service providers and users, and the way they assume responsibility. The high level of intelligence shown by generative AI services and products, such as ChatGPT's high-quality text editing and the efficient generation of code, video and other content, poses severe challenges to the governance of online information content. Once applied in grey areas of the internet, AI could pose a great threat to the personal and property safety of citizens. Therefore, the authorities should make great efforts to standardize the technological innovation practices of developers and service providers, and guide the sound development of the sector by writing into law governance measures such as advance security risk assessments and mandatory algorithm filing.

On the other hand, a fair and just competitive environment for business entities should be created by establishing safeguard provisions that promote the efficient allocation of innovation resources. Given that AI technological innovation cannot be separated from algorithms, data and computing power, the corresponding legal protection system should be centered on these three elements.

The country should improve its capacity for independent innovation in algorithm models by building on basic scientific and technological innovation, the transformation of scientific and technological achievements, and personnel training. It should promote the diversification of data acquisition methods to fully protect the equal right of small and medium-sized enterprises to obtain data for their development. A computing resource supply mechanism covering computing networks, resource scheduling and planning, and service trading should also be built.